Ibisco system architecture

Introduction

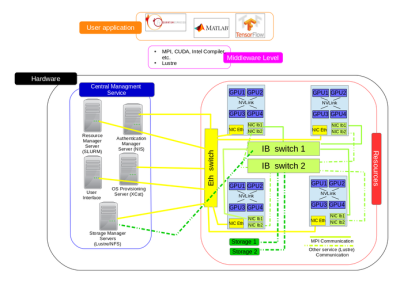

The architecture of the hybrid cluster of the IBiSCo Data Center (Infrastructure for BIg data and Scientific Computing) can be represented as a set of layers (Figure 1).

The lowest layer of the architecture consists of the hardware, made up of compute and storage nodes; the top layer is the application level, which allows users to submit their tasks.

The intermediate level of the architecture consists of the CUDA and MPI libraries, which let the other two levels communicate with each other. The Lustre distributed file system, which offers high-performance access to storage resources, also belongs to this level.

To guarantee the correct working conditions of the cluster it was necessary to use a high-performance network. Therefore, InfiniBand technology was chosen.

Figure 1: Diagram of levels of the cluster

The hardware level

The cluster comprises 36 nodes and 2 switches, placed in 4 racks of the Data Center. The nodes perform two functions: computation and storage. To support computation there are 128 GPUs, distributed among 32 nodes (4 GPUs per node). To support storage, 320 terabytes are available, distributed among 4 nodes (80 terabytes per node).

As previously mentioned, InfiniBand is used to ensure access to resources and low-latency, high-bandwidth communication between nodes. This technology provides Remote Direct Memory Access (RDMA), which allows a local system to access a remote memory location (main memory or GPU memory of a remote node) directly, without involving the CPUs, thus reducing the delay in message transfer.

The peculiarity of the cluster lies in its heterogeneity, given by the coexistence of two technologies: InfiniBand and Ethernet. Despite the advantages of InfiniBand, Ethernet cannot be eliminated since it is essential for maintenance operations, monitoring the infrastructure, and ensuring access to resources outside the cluster.

The Storage Node Architecture

The cluster has 4 Dell R740 storage nodes, each offering sixteen 16 TB SAS HDDs and eight 1.9 TB SATA SSDs. Each node is equipped with two InfiniBand EDR ports, one of which is connected to the Mellanox InfiniBand switch dedicated to storage, which guarantees a 100 Gb/s connection to all the compute nodes.

While the aforementioned nodes are dedicated to the home and scratch areas of users, a separate 3 PB storage system will also be available. It will be accessible via Ethernet and can be used as a repository: users can move data between it and the storage systems connected to InfiniBand when their jobs need large amounts of data. As for the Ethernet network, each node is equipped with two 25 Gb/s ports for connection to the Data Center core switch.

The Compute Node Architecture

The cluster compute nodes are 32 Dell C4140s, each equipped with 4 NVIDIA V100 GPUs, 2 Ethernet ports at 10 Gb/s each, 2 InfiniBand ports at 100 Gb/s each, 2 second-generation Intel Xeon Gold CPUs, and 2 SATA solid-state disks of 480 GB each. Each node is also equipped with 22 RAM modules of 64 GB each, for a total of 1408 GB (1.375 TiB). Each GPU is equipped with 32 GB of RAM. The nodes can be divided into 3 sub-clusters. Table 1 below shows the hardware features specific to each sub-cluster.

| Sub-Cluster | Number of nodes | InfiniBand NIC Type | Processor Type | Number of cores |
|---|---|---|---|---|
| 1 | 21 | Mellanox MT28908 [ConnectX-6] | Intel Xeon Gold 6240R CPU@2.40GHz | 48 |
| 2 | 4 | Mellanox MT27800 [ConnectX-5] | Intel Xeon Gold 6230R CPU@2.10GHz | 56 |
| 3 | 7 | Mellanox MT27800 [ConnectX-5] | Intel Xeon Gold 6230R CPU@2.10GHz | 56 |

Table 1: Hardware specifications
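The figures quoted for the compute nodes can be cross-checked with a few lines of arithmetic. The sketch below is only an illustration: it copies the node counts from Table 1 and the per-node figures from the text, and the class and variable names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubCluster:
    """One row of Table 1 (names are illustrative, values are from the table)."""
    name: str
    nodes: int
    nic: str
    cpu: str


SUB_CLUSTERS = [
    SubCluster("1", 21, "ConnectX-6", "Xeon Gold 6240R"),
    SubCluster("2", 4, "ConnectX-5", "Xeon Gold 6230R"),
    SubCluster("3", 7, "ConnectX-5", "Xeon Gold 6230R"),
]

GPUS_PER_NODE = 4    # NVIDIA V100 GPUs per C4140 node
DIMMS_PER_NODE = 22  # RAM modules per node
DIMM_SIZE_GB = 64    # size of each module

total_nodes = sum(sc.nodes for sc in SUB_CLUSTERS)
total_gpus = total_nodes * GPUS_PER_NODE
ram_per_node_gb = DIMMS_PER_NODE * DIMM_SIZE_GB
# Dividing by 1024 reproduces the 1.375 figure quoted in the text.
ram_per_node_tib = ram_per_node_gb / 1024

print(total_nodes, total_gpus, ram_per_node_gb, ram_per_node_tib)
# prints: 32 128 1408 1.375
```

The three sub-clusters add up to the 32 compute nodes and 128 GPUs stated in the hardware overview.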

NVLink and InfiniBand: High-performance interconnections for intra and inter-node GPU communications

One of the goals of the cluster is to make the infrastructure scalable based on application requirements. C4140 servers are available with both PCIe 3.0 and NVLink 2.0 communication channels, enabling GPU-CPU and GPU-GPU communications over PCIe or NVLink respectively.

When an application only needs CPUs, parallelism is provided by the two CPUs available on each C4140 node. When an application requires GPU parallelism, an interconnect more efficient than the common PCIe bus is recommended. Unlike PCIe 3.0, NVLink 2.0 uses point-to-point connections, which offer advantages in terms of communication performance. While the maximum throughput of a PCIe 3.0 link is 16 GB/s, an NVLink link reaches 25 GB/s per direction, so its total bidirectional bandwidth is 50 GB/s. In our specific case, each V100 GPU has 2 links to each of the other 3 GPUs, for a total of 6 links and up to 300 GB/s of aggregate bidirectional bandwidth (see Figure 2). When applications require more than 4 GPUs, an efficient inter-node communication technology, such as InfiniBand, is necessary.

A later section describes the middleware needed to enable these communication models. InfiniBand RDMA technology ensures that data is not copied between network layers but transferred directly to the NIC buffers; applications can access remote memory directly, without CPU involvement, achieving extremely low latency, on the order of hundreds of nanoseconds.
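The NVLink bandwidth figures above follow from simple multiplication. The following sketch reproduces them, using only the numbers quoted in the text; the constant names are illustrative, and the per-pair figure is derived here rather than stated in the source.

```python
PCIE3_GBPS = 16             # maximum PCIe 3.0 link throughput quoted in the text, GB/s
NVLINK2_ONE_WAY_GBPS = 25   # NVLink 2.0 throughput of one link in one direction, GB/s
GPUS_PER_NODE = 4
LINKS_PER_PEER = 2          # links from one V100 to each other GPU in the node

# A link carries traffic in both directions at the same time.
nvlink_bidir_per_link = 2 * NVLINK2_ONE_WAY_GBPS

# Each GPU talks to the 3 other GPUs over 2 links each.
links_per_gpu = LINKS_PER_PEER * (GPUS_PER_NODE - 1)

# Aggregate bidirectional bandwidth available to one GPU.
aggregate_per_gpu = links_per_gpu * nvlink_bidir_per_link

# Derived figure (not stated in the text): bandwidth between one pair of GPUs.
bidir_per_gpu_pair = LINKS_PER_PEER * nvlink_bidir_per_link

print(nvlink_bidir_per_link, links_per_gpu, aggregate_per_gpu, bidir_per_gpu_pair)
# prints: 50 6 300 100
```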

Figure 2

HPC cluster network as a combination of Ethernet and InfiniBand technologies

Supporting both High-Throughput Computing (HTC) and High-Performance Computing (HPC) workloads is an important aspect of an efficient cluster network. Although InfiniBand technology is characterized by its low latency, not all applications benefit from such a high-performance network, because their workflow may make limited use of interprocess communication. It is therefore useful to also have Ethernet interconnections, allowing each application to exploit the technology best suited to its needs. To provide this versatility, our cluster implements a hybrid InfiniBand-Ethernet network.

It is imperative that network traffic is guaranteed even in the event of a failure of a network device. To satisfy this requirement, there are two identical switches, and each connection to the first switch is replicated on the second one. If the first switch fails, an automatic failover service enables the connections via the second one. Finally, the Ethernet LAG protocol is used to improve the overall efficiency of the network: the result is a doubling of the bandwidth when both switches are active. To this end, in our infrastructure the Ethernet switches (model Huawei CE-8861-4C-EI) are linked in a ring topology via 2×100 Gb/s stack links, and the multi-chassis Link Aggregation Group (mLAG) protocol is used to aggregate the 25 Gb/s ports.

To guarantee high efficiency also for heterogeneous application workflows, we implemented two InfiniBand networks dedicated to different tasks: one for interprocess communication and the other for data access. Each network uses a Mellanox SB7800 switch.
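The effect of link aggregation and failover on the Ethernet bandwidth seen by a node can be modelled very simply. The sketch below is a toy model, not a description of the switch configuration: it assumes one 25 Gb/s port per switch, and the function name is hypothetical.

```python
PORT_SPEED_GBPS = 25  # speed of each aggregated Ethernet port, from the text


def lag_bandwidth_gbps(switch_a_up: bool, switch_b_up: bool,
                       port_speed_gbps: int = PORT_SPEED_GBPS) -> int:
    """Bandwidth available to a node with one port on each of two switches.

    Assumption: the aggregated link uses every port whose switch is up,
    and traffic moves to the surviving port when a switch fails.
    """
    active_ports = int(switch_a_up) + int(switch_b_up)
    return active_ports * port_speed_gbps


print(lag_bandwidth_gbps(True, True))    # both switches active: 50
print(lag_bandwidth_gbps(False, True))   # first switch failed: 25
print(lag_bandwidth_gbps(False, False))  # no connectivity: 0
```

With both switches active the node sees twice the bandwidth of a single port, and a single switch failure halves the bandwidth instead of cutting the node off.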

The Middleware level

To allow efficient use of the technologies described, it is necessary to provide a software layer that supports communication between the layers.

The Message Passing Interface (MPI) is a programming paradigm widely used in parallel applications that offers mechanisms for interprocess communication. This communication can take place in different forms: point-to-point, one-sided or collective. MPI implementations have evolved by introducing support for CUDA-aware communication in heterogeneous GPU-enabled contexts, thus enabling optimized communication between host and GPU. For this reason, modern MPI distributions provide CUDA-aware MPI libraries built on the Unified Communication X (UCX) framework. The middleware installed on our cluster is based on a combination of the following technologies:

- OpenFabrics Enterprise Distribution (OFED) for drivers and libraries needed by Mellanox InfiniBand NICs.

- CUDA Toolkit for drivers, libraries and development environment required to take advantage of NVIDIA GPUs.

- Open MPI, a CUDA-aware implementation of MPI, through the UCX framework.

- A Lustre distributed file system to access storage resources with a high performance paradigm.

The process communication sub-layer

Moving data between processes continues to be a critical aspect of harnessing the full potential of a GPU. Modern GPUs provide data copying mechanisms that can facilitate and improve the performance of communication between GPU processes. In this regard, NVIDIA has introduced CUDA Inter-Process Copy (IPC) and GPUDirect Remote Direct Memory Access (RDMA) technologies for intra- and inter-node GPU process communication. NVIDIA has partnered with Mellanox to make this solution available for InfiniBand-based clusters. Another noteworthy technology is NVIDIA gdrcopy, which enables inter-node GPU-to-GPU communication optimized for small messages, avoiding the significant overhead of exchanging synchronization messages via the rendezvous protocol.

The UCX framework combines the aforementioned technologies with communication libraries (e.g. Open MPI). This framework is driven by the UCF Consortium (Unified Communication Framework), a collaboration between industry, laboratories and universities to create production-grade communication frameworks and open standards for data-centric, high-performance applications. UCX is designed as a communication framework optimized for modern, high-bandwidth, low-latency networks. It exposes a set of abstract communication primitives that automatically use the best hardware resources available at execution time. Supported technologies include RDMA (both InfiniBand and RoCE), TCP, GPU, shared memory, and atomic network operations. In addition, UCX implements best practices for transferring messages of all sizes, based on the experience gained while running applications on the largest data centers and supercomputers in the world.

Table 2 lists the software characteristics of the nodes in the cluster.
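As an illustration of how these pieces fit together at launch time, the sketch below assembles an Open MPI command line that selects UCX as the point-to-point layer and restricts UCX to a set of transports. It is a minimal sketch under stated assumptions: Open MPI is assumed to be built with UCX and CUDA support, the application path `./my_gpu_app` and the helper name are hypothetical, and UCX normally chooses transports automatically, so pinning them with `UCX_TLS` is optional.

```python
import shlex


def build_mpirun_command(num_procs, app, transports):
    """Return an mpirun argument list that uses the UCX PML (hypothetical helper)."""
    return [
        "mpirun", "-np", str(num_procs),
        "--mca", "pml", "ucx",                    # use UCX for point-to-point messaging
        "-x", "UCX_TLS=" + ",".join(transports),  # export the transport list to all ranks
        app,
    ]


# UCX transport names: InfiniBand reliable connection, shared memory,
# CUDA memory copies, CUDA IPC (intra-node) and gdrcopy (small messages).
TRANSPORTS = ["rc", "sm", "cuda_copy", "cuda_ipc", "gdr_copy"]

cmd = build_mpirun_command(8, "./my_gpu_app", TRANSPORTS)  # app name is a placeholder
print(shlex.join(cmd))
```

Building the command as a list keeps each argument separate, so it can be passed to `subprocess.run` without shell quoting issues.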

| OS | InfiniBand Driver | CUDA Driver & Runtime Version | NVIDIA GPUDirect-RDMA | NVIDIA gdrcopy | UCX | MPI Distribution | BLAS Distribution |
|---|---|---|---|---|---|---|---|
| CentOS Linux 7 | NVIDIA MLNX_OFED 5.3-1.0.0.1 | 11.1 | 1.1 | 2.2 | 1.10.0 | Open MPI 4.1.0rc5 | Intel MKL 2021.1.1 |

Table 2: Software Specifications

The Lustre Distributed File System

As stated earlier, a key aspect of high-performance computing is the efficient delivery of data to and from the compute nodes. While InfiniBand technology can bridge the gap between CPU, memory, and I/O latencies, storage components could be a bottleneck for the entire workload. The implementation adopted in our hybrid cluster is based on Lustre, a high-performance parallel and distributed file system. High performance is ensured by Lustre's flexibility in supporting multiple network technologies, from common Ethernet and TCP/IP-based ones to high-speed, low-latency ones such as InfiniBand, RDMA and RoCE. In the Lustre architecture, all compute nodes in the cluster are clients, while all data is stored on Object Storage Targets (OSTs) running on the storage nodes. Communications are handled by a Management Service (MGS) and all metadata are stored on Metadata Targets (MDTs).

In the implemented Lustre architecture (see Figure 3), both the Management Service and the Metadata Service (MDS) are configured on one storage node, with the Metadata Targets (MDTs) stored on a 4-disk RAID-10 SSD array. The other 3 storage nodes host the OSTs for the two file systems exposed by Lustre, one for the home directories of the users and one for the scratch area of the jobs. In particular, the home file system is characterized by large disk space requirements and the need for fault tolerance, so it is composed of 6 OSTs, each stored on a 3-disk RAID-5 array of SAS HDDs providing 30 TB. The scratch area, on the other hand, is characterized by fast disk access times without the need for redundancy. Therefore, it is composed of 6 OSTs stored on 1.8 TB SSDs.

Figure 3: The implementation of the Lustre architecture
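The capacities implied by this layout can be worked out from the number and size of the storage targets. The sketch below is an illustration only: class and function names are hypothetical, sizes are expressed in decimal GB, and the RAID-5 formula is the standard one (one disk's worth of capacity is used for parity).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileSystem:
    """A Lustre file system described by its storage targets (illustrative)."""
    name: str
    targets: int         # number of storage targets backing the file system
    target_size_gb: int  # capacity of each target, in GB
    redundant: bool      # whether each target sits on a redundant array

    @property
    def capacity_gb(self) -> int:
        return self.targets * self.target_size_gb


def raid5_usable_gb(disks: int, disk_size_gb: int) -> int:
    """Usable capacity of a RAID-5 array: one disk's worth is lost to parity."""
    return (disks - 1) * disk_size_gb


HOME = FileSystem("home", targets=6, target_size_gb=30_000, redundant=True)
SCRATCH = FileSystem("scratch", targets=6, target_size_gb=1_800, redundant=False)

print(HOME.capacity_gb)             # 180000 GB, i.e. 180 TB
print(SCRATCH.capacity_gb)          # 10800 GB, i.e. 10.8 TB
print(raid5_usable_gb(3, 16_000))   # 32000 GB from three 16 TB disks
```

The 30 TB per target quoted in the text is slightly below the 32 TB that three 16 TB disks yield in RAID-5; the difference is presumably due to formatting overhead or unit conversion, which is an assumption and not something the source states.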